Social media is a double-edged sword. On one side, it empowers people to share opinions freely and connect across the globe. On the other, it has become a breeding ground for toxic behavior, abuse, and trolling. This is especially true in regions like Bangladesh, where millions communicate in a mix of English and Bangla, popularly known as “Banglish.” Traditional sentiment analysis models struggle with this linguistic blend, which makes it harder to filter harmful content effectively.

That’s where my research comes in.

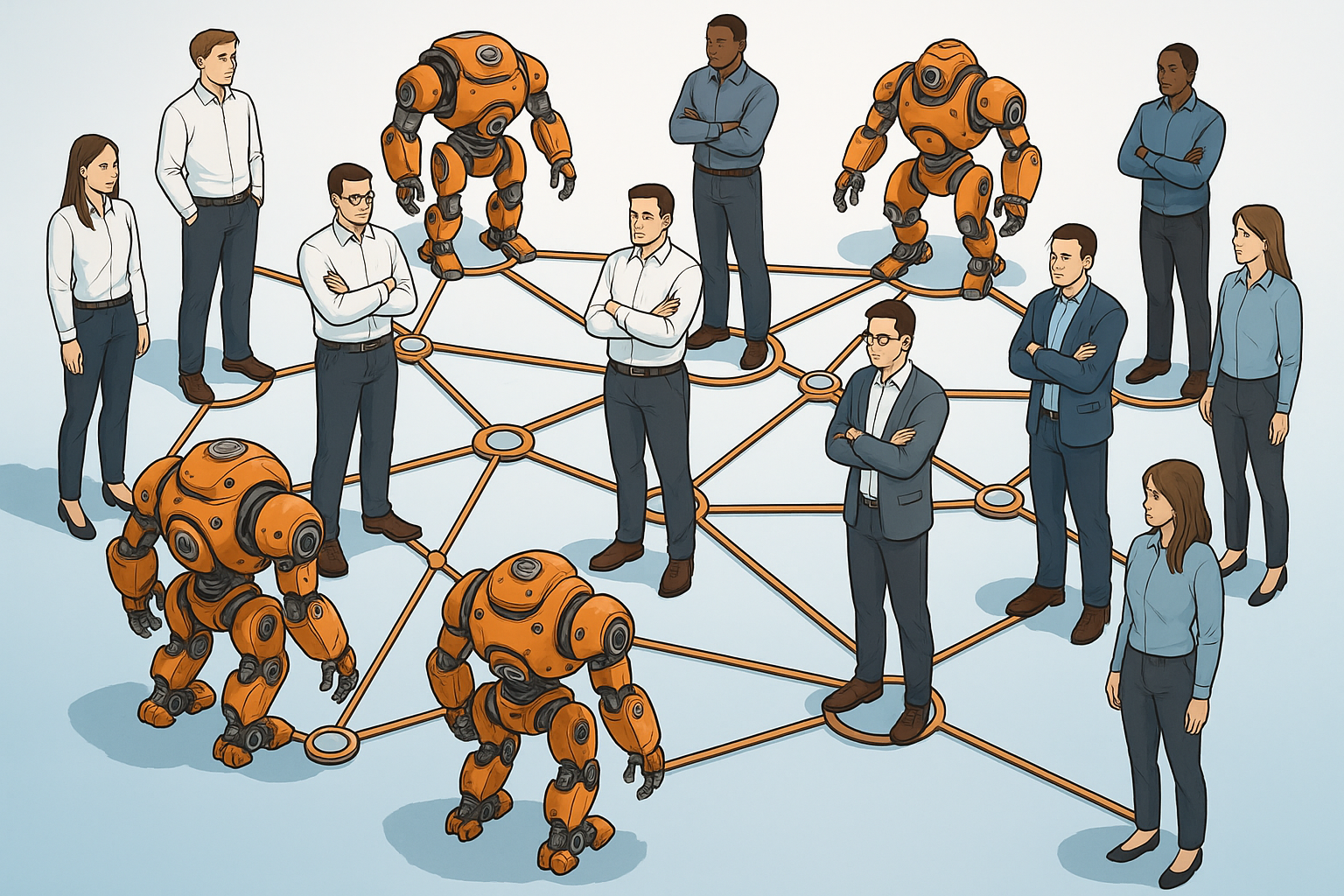

As part of my MSc thesis at United International University, I explored how machine learning and large language models (LLMs) can identify trolls and abusive comments in code-mixed text, specifically English, Bangla, and Banglish. Our aim was to create a safer digital space by building models that understand not just the words but the emotions and intentions behind them, even in complex language formats.

The Problem

Banglish is not just a casual mix of English and Bangla. It often includes slang, sarcasm, regional expressions, and even emojis. For instance, a sarcastic “Great job” paired with an emoji after a poor performance can easily be misclassified as positive by a traditional AI model. Add deception techniques such as deliberate misspellings (“f**k”, “fucc”, “phuck”) used to bypass moderation, and you have a serious challenge.

This multilingual chaos confuses most sentiment analysis tools, which are typically trained on English or pure Bangla. The result? Trolls and abusers slip through moderation nets, spreading hate, misinformation, and harassment, especially on platforms like Facebook, which has over 47 million active users in Bangladesh.

The Solution

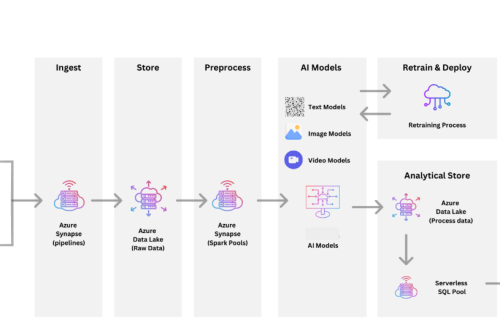

To address this, we built a pipeline using both classical machine learning and state-of-the-art LLMs like OpenAI’s GPT-4 and Google Gemini. Here’s how we approached it:

- Corpus Creation: We gathered 10,000 real comments from Facebook posts related to Bangladeshi cricket. These were manually labeled into three categories: Neutral/Positive, Negative, and Strong Negative (troll/abuse).

- Emoji and Deception Analysis: We didn’t ignore emojis; we classified them and analyzed their sentiment. We also used embeddings to detect obfuscated abusive words by matching them against a vector database of known slurs and insults.

- Banglish Transliteration: A custom Banglish-to-Bangla transliterator, combining ML with the Avro library, converted mixed text into a more analyzable form.

- Model Fusion: Our core sentiment analysis model combined multilingual BERT for contextual understanding with a Graph Convolutional Network (GCN) for relationship awareness, all wrapped in a dual attention mechanism to capture both structure and semantics.

- Code-Switching Awareness: We introduced an auxiliary task to predict how many times the language switches in a sentence, improving the model’s performance on mixed-language data.
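The emoji half of the Emoji and Deception Analysis step can be pictured as a lexicon lookup. The sketch below is a minimal illustration under stated assumptions, not the thesis code: `EMOJI_POLARITY` is a hypothetical five-entry lexicon with made-up scores, and `emoji_features` and `is_incongruent` are illustrative names. It averages the polarity of known emojis in a comment and flags comments whose text and emoji polarities point in opposite directions, a cheap hint of the kind of sarcasm described in The Problem.

```python
# Hypothetical emoji lexicon: polarity in [-1, 1]. A real system would use a
# curated, annotated resource rather than these illustrative values.
EMOJI_POLARITY = {
    "\U0001F44F": 1.0,   # clapping hands
    "\U0001F60D": 1.0,   # smiling face with heart-eyes
    "\U0001F644": -0.5,  # face with rolling eyes
    "\U0001F621": -1.0,  # pouting (angry) face
    "\U0001F92C": -1.0,  # face with symbols on mouth
}


def emoji_features(text: str) -> dict:
    """Count known emojis and average their polarity (0.0 if none are found)."""
    # Matching is code point by code point, so ZWJ sequences and flag emojis
    # are not treated as single units in this sketch.
    found = [ch for ch in text if ch in EMOJI_POLARITY]
    if not found:
        return {"count": 0, "polarity": 0.0}
    avg = sum(EMOJI_POLARITY[ch] for ch in found) / len(found)
    return {"count": len(found), "polarity": avg}


def is_incongruent(text_polarity: float, emoji_polarity: float) -> bool:
    """Heuristic sarcasm hint: text and emojis have opposite, non-zero signs."""
    return text_polarity * emoji_polarity < 0


if __name__ == "__main__":
    feats = emoji_features("Great job \U0001F644")
    print(feats)                                   # {'count': 1, 'polarity': -0.5}
    # 1.0 stands in for a text-model score of "Great job" (assumed value).
    print(is_incongruent(1.0, feats["polarity"]))  # True
```

In practice the text polarity would come from the main classifier; the incongruence flag would be one extra feature, not a verdict on its own.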
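For the deception half of the same step, the post matches obfuscated words against a vector database of embeddings. The sketch below swaps in a simpler technique so it runs with the standard library alone: leetspeak normalization followed by character-bigram count vectors and a brute-force cosine search over an in-memory dict. The lexicon `KNOWN_INSULTS`, the 0.6 threshold, and all function names are hypothetical; a production system would use learned subword embeddings and a real vector index instead.

```python
import math
from collections import Counter

# Common character substitutions used to dodge keyword filters (illustrative, not exhaustive).
LEET = str.maketrans({"0": "o", "1": "i", "!": "i", "3": "e", "4": "a", "@": "a", "$": "s"})

# Hypothetical lexicon standing in for the vector database of known insults.
KNOWN_INSULTS = ["idiot", "stupid"]


def normalize(token: str) -> str:
    """Lowercase, undo leetspeak, and drop non-letters such as '*' masks."""
    return "".join(ch for ch in token.lower().translate(LEET) if ch.isalpha())


def bigrams(word: str) -> Counter:
    """Character bigram counts, with '#' marking word boundaries."""
    padded = f"#{word}#"
    return Counter(padded[i:i + 2] for i in range(len(padded) - 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_sq = sum(v * v for v in a.values()) * sum(v * v for v in b.values())
    return dot / math.sqrt(norm_sq) if norm_sq else 0.0


# Precomputed "index" (term -> vector), searched by brute force below.
INDEX = {term: bigrams(term) for term in KNOWN_INSULTS}


def find_match(token: str, threshold: float = 0.6):
    """Return (term, score) for the closest known insult at or above threshold, else None."""
    vec = bigrams(normalize(token))
    term, score = max(((t, cosine(vec, v)) for t, v in INDEX.items()), key=lambda p: p[1])
    return (term, score) if score >= threshold else None
```

With these toy settings, `find_match("idi0t")` returns `("idiot", 1.0)`, `find_match("stuppid")` matches `"stupid"`, and `find_match("student")` returns `None`. The threshold trades recall against false positives; the post does not state what similarity cutoff was used.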
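The transliteration step can be illustrated with a toy rule-based converter in the spirit of Avro's phonetic scheme. This is not the Avro library or its rule set: the mapping tables below are a small hypothetical subset, and `transliterate` is an illustrative name. It applies greedy longest-match (two-letter keys such as `bh` or `sh` before single letters) and writes a vowel as a dependent sign after a consonant but as an independent letter elsewhere.

```python
# Hypothetical, heavily reduced phonetic tables (Avro's real rules are far richer).
CONSONANTS = {
    "k": "ক", "kh": "খ", "g": "গ", "gh": "ঘ", "ch": "চ", "j": "জ",
    "t": "ত", "th": "থ", "d": "দ", "dh": "ধ", "n": "ন", "p": "প",
    "ph": "ফ", "b": "ব", "bh": "ভ", "m": "ম", "r": "র", "l": "ল",
    "s": "স", "sh": "শ", "h": "হ",
}
VOWEL_SIGNS = {"a": "া", "i": "ি", "u": "ু", "e": "ে", "o": "ো"}         # after a consonant
INDEPENDENT_VOWELS = {"a": "আ", "i": "ই", "u": "উ", "e": "এ", "o": "অ"}  # word-initial / after a vowel


def transliterate(word: str) -> str:
    """Greedy longest-match Banglish-to-Bangla conversion over the toy tables."""
    word = word.lower()
    out = []
    prev_consonant = False
    i = 0
    while i < len(word):
        for size in (2, 1):  # try two-letter keys first
            chunk = word[i:i + size]
            if len(chunk) != size:
                continue
            if chunk in CONSONANTS:
                out.append(CONSONANTS[chunk])
                prev_consonant = True
                i += size
                break
            if chunk in VOWEL_SIGNS:
                table = VOWEL_SIGNS if prev_consonant else INDEPENDENT_VOWELS
                out.append(table[chunk])
                prev_consonant = False
                i += size
                break
        else:
            # Unknown character (space, digit, punctuation): copy it through.
            out.append(word[i])
            prev_consonant = False
            i += 1
    return "".join(out)
```

For example, `transliterate("ami")` yields আমি. A pure rule table also shows where rules run out: it always writes `o` after a consonant as ো, so `kotha` becomes কোথা even though it is often intended as কথা ("talk"). Ambiguities like this are the kind of case a learned component can help resolve.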
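For readers unfamiliar with GCNs, the widely used graph-convolution layer of Kipf and Welling updates each node from its neighbors' features. The post does not say which GCN variant, graph construction, or attention formulation the model uses, so the following is the textbook layer only; taking $H^{(0)}$ to be the multilingual BERT token embeddings and $A$ to be a word-level graph (for example, dependency or co-occurrence edges) is an assumption for illustration.

```latex
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I_N,
\qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
```

Here $A$ is the $N \times N$ adjacency matrix over $N$ tokens, $I_N$ adds self-loops, $W^{(l)}$ is a learned weight matrix, and $\sigma$ is a nonlinearity such as ReLU. The symmetric normalization keeps high-degree nodes from dominating the aggregated features.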
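Labels for the code-switching auxiliary task can be generated automatically. The sketch below is one plausible way to do it, not the authors' procedure: it tags each token as Bangla script (Unicode block U+0980 to U+09FF), English (membership in a small hypothetical `ENGLISH_WORDS` set), or Banglish (any other Latin-script word), skips tokens with no letters such as emojis, and counts adjacent tag changes. Treating English and Banglish as distinct languages is itself an assumption. A common way to train such a count is a second output head whose loss is added to the classification loss, L = L_cls + λ · L_switch with a tuned weight λ; the post does not say how the two losses were combined.

```python
# Hypothetical English word list; a real tagger would use a large lexicon or a
# trained language-identification model.
ENGLISH_WORDS = {"great", "job", "good", "bad", "match", "team", "player", "the", "is", "very"}


def token_language(token: str):
    """Tag a token as 'bn', 'en', 'banglish', or None if it has no usable letters."""
    if any(0x0980 <= ord(ch) <= 0x09FF for ch in token):
        return "bn"  # contains Bengali-script characters
    word = "".join(ch for ch in token.lower() if ch.isascii() and ch.isalpha())
    if not word:
        return None  # punctuation, digits, emojis
    return "en" if word in ENGLISH_WORDS else "banglish"


def count_switches(sentence: str) -> int:
    """Number of positions where consecutive tagged tokens change language."""
    tags = [t for t in map(token_language, sentence.split()) if t is not None]
    return sum(1 for prev, cur in zip(tags, tags[1:]) if prev != cur)


if __name__ == "__main__":
    print(count_switches("Great job bhai"))        # 1
    print(count_switches("khela ta great chilo"))  # 2
```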

The Results

Our proposed model achieved an accuracy of 86.75% and a macro-average F1-score of 86.72%, clearly outperforming traditional models like Naive Bayes and even LSTM-based architectures. Adding the GCN and code-switching awareness improved the model’s ability to correctly classify sarcastic or disguised abuse.
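Macro-averaged F1 computes F1 separately for each class and takes the unweighted mean, so all three classes count equally regardless of how many comments each contains. The sketch below spells out that computation; the toy labels in the usage example are invented for illustration and are not from the thesis data. scikit-learn's `f1_score(y_true, y_pred, average="macro")` computes the same quantity.

```python
def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-class F1 scores; undefined precision/recall count as 0.0."""
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    labels = ["Neutral/Positive", "Negative", "Strong Negative"]
    # Toy example: one Neutral/Positive comment is mislabeled as Negative.
    y_true = ["Neutral/Positive", "Neutral/Positive", "Negative", "Strong Negative"]
    y_pred = ["Neutral/Positive", "Negative", "Negative", "Strong Negative"]
    print(round(macro_f1(y_true, y_pred, labels), 4))  # 0.7778
```

In the toy case accuracy is 3/4, while macro-F1 averages per-class F1 scores of 2/3, 2/3, and 1, giving 7/9.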

We also tested fine-tuned LLMs. Google Gemini outperformed OpenAI’s GPT-4 in F1-score (82.6% vs. 77.7%), especially in identifying strong negative content. Gemini’s safety features even flagged extreme hate speech, which we treated as correct predictions.
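Treating safety flags as correct predictions implies a parsing step between a model's reply and the three labels. Below is a minimal sketch of such a step, not the authors' code: `PROMPT`, `build_prompt`, and `parse_llm_label` are hypothetical names, the prompt wording is invented, and no provider SDK is called. How a blocked or flagged response is detected differs between SDKs, so `blocked` is assumed to be a boolean derived from the provider's response metadata.

```python
from typing import Optional

LABELS = ("Neutral/Positive", "Negative", "Strong Negative")

# Hypothetical prompt; the thesis prompt (and any fine-tuning format) may differ.
PROMPT = (
    "Classify the following social media comment (English, Bangla, or Banglish) "
    "into exactly one of: " + ", ".join(LABELS) + ". "
    "Reply with the label only.\n\nComment: {comment}"
)


def build_prompt(comment: str) -> str:
    return PROMPT.format(comment=comment)


def parse_llm_label(reply: Optional[str], blocked: bool) -> Optional[str]:
    """Map a raw LLM reply to a label; None means the reply could not be parsed."""
    if blocked:
        # Assumption based on the post: a safety block on extreme hate speech
        # is scored as Strong Negative.
        return "Strong Negative"
    text = (reply or "").lower()
    # Check "strong negative" before "negative", since the latter is a substring.
    if "strong negative" in text:
        return "Strong Negative"
    if "negative" in text:
        return "Negative"
    if "neutral" in text or "positive" in text:
        return "Neutral/Positive"
    return None
```

Substring matching is deliberately crude: a reply such as "not negative" would still be read as Negative, which is why the prompt asks for the label only.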

What’s Next?

While our results are promising, challenges remain. LLMs sometimes misclassify nuanced content, and computational costs are high. In the future, we plan to:

- Reduce model bias in abuse detection

- Improve sarcasm detection using user history and thread context

- Deploy real-time moderation tools for social platforms

Final Thoughts

Social media should be a space for connection, not harassment. By combining linguistic insight, cultural awareness, and cutting-edge AI, we’ve taken a step toward making online interactions safer, especially in the often-ignored Banglish-speaking digital world.

Stay tuned as we work to scale this solution and release it for wider community use.